Issue: DATE column as DT_WSTR in SSIS in lookup February 20, 2017

Posted by furrukhbaig in SSIS.Tags: DATE to DT_WSTR, OLEDB Provider for SQL Server, SQL Native 11.1 provider, SSIS


It appears that the OLE DB Provider for SQL Server (SQLOLEDB) incorrectly maps a DATE column from SQL Server to DT_WSTR (wide string). The same query using the SQL Native Client 11.1 provider (SQLNCLI11.1) correctly maps the column to DT_DBDATE.

Connection string for the SQL Native Client 11.1 provider:

Data Source=(local);Initial Catalog=Test_DB;Provider=SQLNCLI11.1;Integrated Security=SSPI;Auto Translate=False;

Connection string for the OLE DB Provider for SQL Server:

Data Source=(local);Initial Catalog=Test_DB;Provider=SQLOLEDB;Integrated Security=SSPI;Auto Translate=False;

If you have to use the OLE DB provider for its other benefits, you must cast the column using a Data Conversion component. In my case I was using it with a Lookup component, so I could not change the data type, add a Data Conversion component, or change the column metadata in the advanced properties. I ended up using the SQL Native Client 11.1 provider for my problem, but for an OLE DB Source component I would prefer using a Data Conversion component instead.

Hopefully it will help someone. Happy SSIS’ing

Update Statistics after INDEX Rebuild November 17, 2014

Posted by furrukhbaig in Execution Plan.Tags: execution plan, FULLSCAN, Performance Tuning, REBUILD INDEX, scan statistics, statistics, UPDATE STATISTICS


It's a common mistake in overnight maintenance jobs to rebuild indexes and then update statistics, and it's commonly overlooked. UPDATE STATISTICS uses a sample of rows by default, while an index rebuild updates that index's statistics with a full scan as part of the rebuild. If we don't specify the FULLSCAN option with UPDATE STATISTICS, it will overwrite the full-scan statistics with sampled statistics, which may produce a completely different and less efficient query execution plan. Even if you update statistics with a full scan, it's still a waste of resources for the index statistics, as the rebuild has already done that.

I hope it will help someone save a couple of minutes during the overnight maintenance window 😉

Enjoy.

Installing Oracle Oledb Provider for SQL Server 2008 R2 September 3, 2012

Posted by furrukhbaig in Oracle Oledb Provider, SQL Server 2008 R2, SSIS.Tags: Oracle 64 bit SSIS, Oracle Oledb SSIS, Oralce OLEDB Provider, SQL server 2008 R2


I have found this very useful post that solves the classic problem of getting data from Oracle using SSIS: BIDS needs the 32-bit driver at design time, while the runtime requires the 64-bit driver (on 64-bit machines).

I will try it myself, as I need to configure a SQL Server 2008 R2 machine to pull data from an Oracle database using SSIS. I am a bit excited, as in the past I had tons of issues with Oracle connectivity from SSIS.

Processing poorly formatted large text file with SSIS February 28, 2012

Posted by furrukhbaig in SQL Server 2005, SSIS.Tags: csv parsing, ssis script component, ssis text file, text file parsing


I have been working on a project where I am processing large comma-separated text files (2 GB, containing 20 million rows) whose last column can itself contain commas. The Flat File Source alone does not solve the problem, and I had to use a Script Component to handle the free-format value in the last column, which can contain commas within the value.

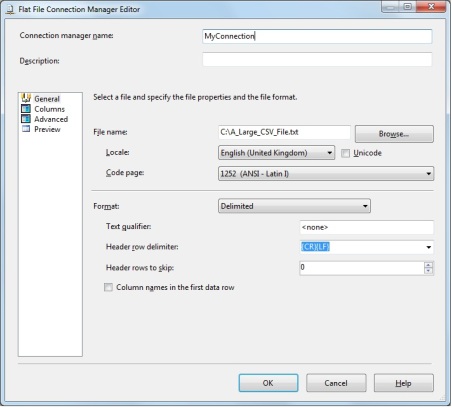

First, you need to define a connection as per the following connection dialogue.

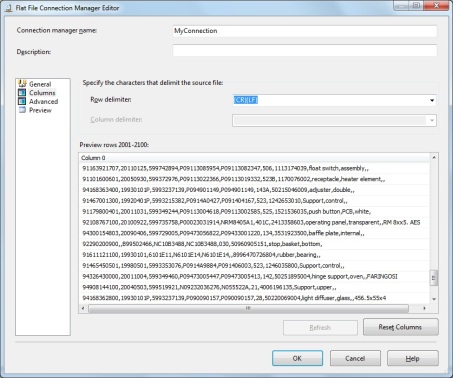

Then click Columns in the left pane. The trick here is to select anything other than CRLF as the column delimiter, go back to the first screen by clicking General, and then come back to this screen.

You will see only one column containing the whole row. Please note that you need to move away from this screen after selecting another delimiter in order to see one column with the whole row.

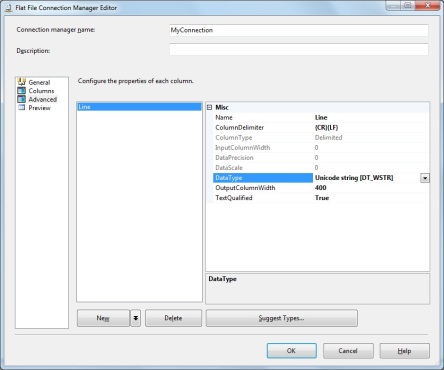

Now click Advanced and name your column anything you like; I am using Line as the column name, as it contains the whole line from the text file. Make sure you select the String (STR) or Unicode String (WSTR) data type with the maximum possible row length.

Now drag a Data Flow Task onto the Control Flow and double-click it.

Now drag a Flat File Source component onto the Data Flow designer.

Select the connection that we created in the steps above for the Flat File Source component.

The Flat File Source should show only one column, as per the connection manager.

Now drag a Script Component onto the Data Flow designer; it will show a dialogue box asking how the script component is going to work. Select Transformation here.

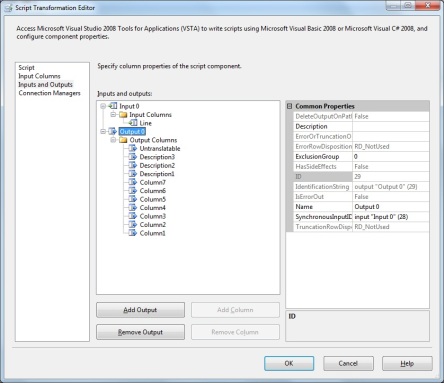

Click Inputs and Outputs. The input should already have one column, as defined in the Flat File Source. Add columns for the output. I have added 11 columns and, for demonstration purposes, changed the names of the columns. Note the “Untranslatable” column, which will contain the free-format value. Be careful with the column data types and allow enough length for the values expected.

Now it's time to write some code in the script component.

    public override void Input0_ProcessInputRow(Input0Buffer Row)
    {
        // Split on commas into at most 11 tokens. The 11th token keeps
        // the rest of the line, including any further commas.
        char[] delimiter = ",".ToCharArray();
        string[] tokenArray = Row.Line.Split(delimiter, 11);

        // Fixed-format columns.
        Row.Column1 = tokenArray[0];
        Row.Column2 = tokenArray[1];
        Row.Column3 = tokenArray[2];
        Row.Column4 = tokenArray[3];
        Row.Column5 = tokenArray[4];
        Row.Column6 = tokenArray[5];
        Row.Column7 = tokenArray[6];
        Row.Description1 = tokenArray[7];
        Row.Description2 = tokenArray[8];
        Row.Description3 = tokenArray[9];

        // Free-format last column; may itself contain commas.
        Row.Untranslatable = tokenArray[10];
    }

Now let me say that I am not an expert in C# or .NET, but I know how to get the work done 😉

The tricky part is the Split function, which does the magic. Note the second parameter: the maximum number of array elements to return (the number of columns expected). This is vital; otherwise Split will create a variable number of array elements depending on how many times the delimiter occurs in the line.

Our target is to get 11 columns; if the delimiter occurs more than 10 times in a row, the rest of the line is kept in the last column.
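To see the effect of the count parameter in isolation, here is a minimal sketch of the same idea in Python rather than C#. The function name `split_line` and its parameters are made up for illustration and are not part of the SSIS package. Python's `str.split` takes the maximum number of splits rather than the maximum number of tokens, so 11 columns means 10 splits.

```python
def split_line(line, column_count=11, delimiter=","):
    """Split a line into exactly column_count tokens.

    Hypothetical helper for illustration only. At most column_count - 1
    splits are made, so any extra delimiters stay inside the last token.
    """
    tokens = line.split(delimiter, column_count - 1)
    if len(tokens) < column_count:
        # A short row would otherwise fail later with an index error.
        raise ValueError(
            "expected %d columns, got %d" % (column_count, len(tokens))
        )
    return tokens


if __name__ == "__main__":
    row = "a,b,c,d,e,f,g,h,i,j,free text, with, extra commas"
    columns = split_line(row)
    print(len(columns))
    print(columns[10])
```

The length check is an addition to the original logic: the C# code above assumes every row has at least 10 delimiters, and a row with fewer would fail when the missing array elements are read.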

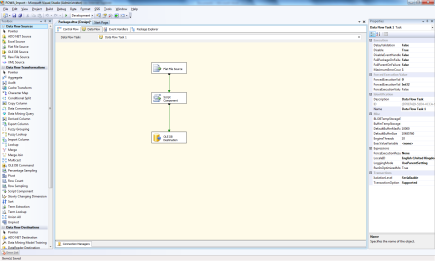

Now you can add the OLE DB Destination. Your data flow should look like the screen below.

I would appreciate any comments, but this code processed a 2 GB text file in less than a minute, loading 20 million rows into a table.

Thanks

Who is Active ? October 25, 2010

Posted by furrukhbaig in DMV's, Execution Plan, Optimize, Queries running on the server, sp_who, sp_who2, sp_whoisactive, system monitoring, Tips, XML Execution Plan.Tags: dmv, optimize, sp_who, sp_who2, sp_whoisactive, system monitoring, xml execution plan


Adam Machanic has released a new build of his system monitoring stored procedure, sp_WhoIsActive. It's really useful and I would like to congratulate him on his efforts.

Check out the link below for his original post and to download the code.

Enjoy !!

GO – Run a batch multiple times March 26, 2009

Posted by furrukhbaig in SQL Server 2005.Tags: GO batch, GO Statement, Run a batch multiple times, SQL Server, SQL Server Tips, undocumented sql feature


Today I came across a post on Kalen Delaney's blog that uses an undocumented feature of the GO statement (batch terminator).

You can run a batch multiple times without re-running the batch or using a WHILE loop.

The following code is taken from the original post; see the post below.

So, to run the INSERT 5 times, I would do this:

    INSERT INTO details
        (SalesOrderID, SalesOrderDetailID, CarrierTrackingNumber, OrderQty, ProductID, SpecialOfferID,
         UnitPrice, UnitPriceDiscount, rowguid, ModifiedDate)
    SELECT SalesOrderID, SalesOrderDetailID, CarrierTrackingNumber, OrderQty, ProductID, SpecialOfferID,
           UnitPrice, UnitPriceDiscount, rowguid, ModifiedDate
    FROM Sales.SalesOrderDetail
    GO 5

Rowcount for Large Tables July 24, 2008

Posted by furrukhbaig in DMV's, Performance, SQL Server 2005, SQL Server 2005 features, Tips, TSQL.Tags: large tables, rowcount, rowcount scan, sys.dm_db_partition_stats


Ever wondered why a simple statement like SELECT COUNT(*) FROM [Table_Name] takes forever to return the row count on large tables? It's because it does a full scan of the table (or one of its indexes) to count the rows. The instant way to get the row count for any table is to query the sys.dm_db_partition_stats Dynamic Management View (DMV), new in SQL Server 2005. This DMV contains row counts and page counts for every table, including all of its partitions. Please note that even if you did not partition your table, it is still created on a single default partition, so the DMV covers all tables.

The following are some useful queries that return row counts almost instantly. In SQL Server 2000, the sysindexes system table was used to get the number of rows in large tables and avoid a full table scan.

Comments are welcome.

    -- All tables having zero rows
    -- Compatible with partitioned tables
    SELECT
        Table_Name = object_name(object_id),
        Total_Rows = SUM(st.row_count)
    FROM
        sys.dm_db_partition_stats st
    GROUP BY
        object_name(object_id)
    HAVING
        SUM(st.row_count) = 0
    ORDER BY
        object_name(object_id)

    -- Row count without doing a full table scan
    -- Returns the total number of rows across all partitions (if the table is partitioned)
    SELECT
        Total_Rows = SUM(st.row_count)
    FROM
        sys.dm_db_partition_stats st
    WHERE
        object_name(object_id) = 'Your_Table_Name_Goes_Here'
        AND (index_id < 2) -- Only heap or clustered index

SSMS slow startup March 7, 2008

Posted by furrukhbaig in Performance, slow start, SQL Server 2005, SSMS, Tips.Tags: slow ssms, sql server management studio slow, ssms slow startup


A friend of mine asked me why SSMS was taking up to a minute to start up on his office machine, and I was not able to figure out the problem until I found this post on Euan Garden's blog.

http://blogs.msdn.com/euanga/archive/2006/07/11/662053.aspx

The quick fix is to add the following lines to the hosts file:

    # entry to get around diabolical Microsoft certificate checks
    # which slow down non internet connected computers
    127.0.0.1 crl.microsoft.com

This is to do with the certificate validity check. It fixed the problem on my friend's machine. Hope it helps others too.

Enjoy !!

Find out whats running on SQL Server 2005 December 13, 2007

Posted by furrukhbaig in DMV's, Execution Plan, Optimize, Performance, Performance Tuning, Profiler, Queries running on the server, SQL Server 2005, SQL Server 2005 features, XML Execution Plan.Tags: dmv, execution plan, profiler, queries running on server, whats running on sql server


Everyone wants to know what's running on the box. Especially if your job is to stabilise the server, you are always concerned about what is killing the box.

A friend of mine has published a very useful post with scripts to find out what's running on SQL Server, along with the execution plans.

http://www.proteanit.com/b/2007/01/22/a-useful-script-to-analyse-current-activity-on-your-box/

The same can also be done by running Profiler and capturing the XML execution plan, which is not always possible due to security restrictions and the overhead of Profiler itself.